Prompt Engineering laid the foundation, but we're ready for what's next. While Prompt Engineering has been the talk of the town lately, Anthropic's recent article got me thinking differently. As a product designer working with AI, I've realized that Context Engineering has been here all along; we just weren't calling it that. Now that the world is comfortable with prompts, it seems the next frontier lies in something more fundamental: the cognitive architecture of our AI agents.

In my time designing AI experiences, I've come to understand that this is our foundational challenge. What truly sets apart a next-gen AI experience isn't just clever prompting; it's the agent's capacity for what I call omni-context awareness. Think about those magical moments when an AI seems to truly get you, maintaining that golden thread of understanding across your entire relationship. These "Wow moments" we chase? They're not built on faster processing speeds or fancier algorithms. They're built on smarter memory. And the tools we give our AI agents? They're not just functions anymore; they're more like cognitive extensions, defining how the agent understands and remembers its ongoing dialogue with users.

Designing for the cognitive burden

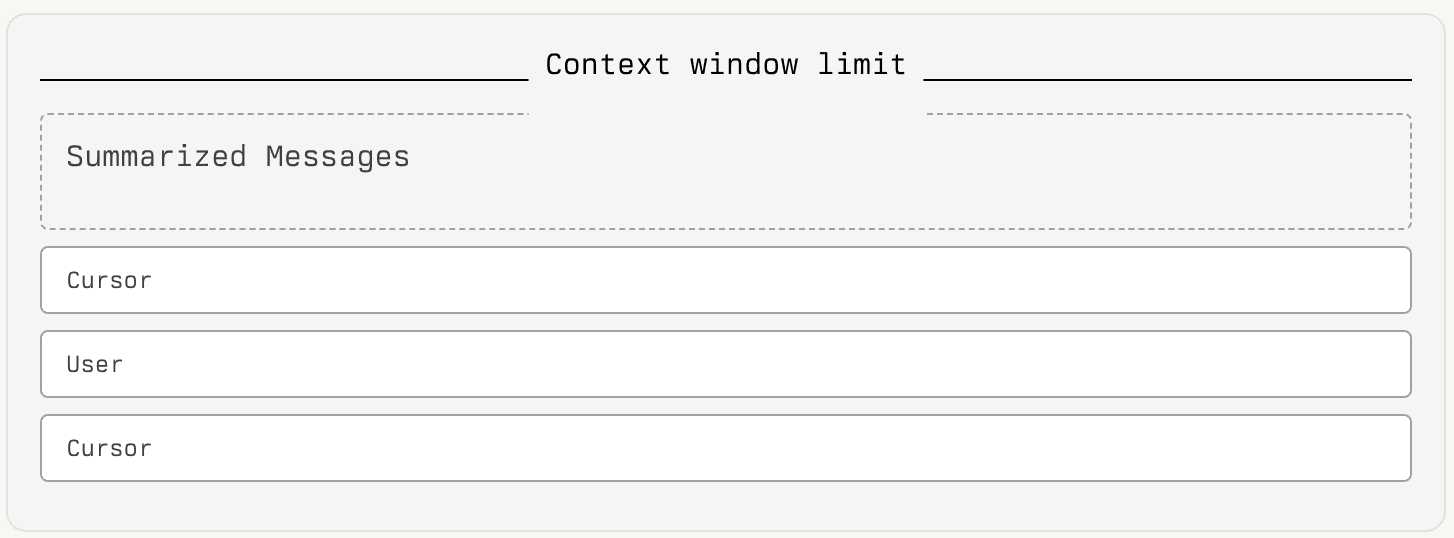

Let's talk about something that fascinates me: the context window. Our Staff Engineer recently shared an incredible resource (Context Window Architecture) that completely changed my understanding of this concept. It's not just some technical specification; it's more like an AI's working memory, a finite cognitive budget that shapes everything about how it thinks and responds. The more I studied the architecture diagrams and examples, the more I realized how crucial this cognitive budget is to designing effective AI interactions.

Sure, modern models like Claude Sonnet 4 boast impressive capacities of up to 1 million tokens, but here's the thing I learned from diving deep into context window architecture: bigger isn't always better. In fact, a larger context window just gives us more room for noise. It's like having a bigger desk: you might have more space, but that doesn't automatically make you more organized. When we exceed this cognitive budget, something fascinating happens: the agent experiences what we call context rot. Its reasoning becomes fuzzy, its responses less coherent and, most importantly, its very identity starts to fragment.

Here's where it gets interesting: to solve this, we actually need to embrace what I call a "forgetting-first" philosophy. Yes, you read that right: sometimes the key to better AI isn't about remembering more, but about forgetting better.

Noise dulls intelligence, whether human or artificial. The art is in the forgetting.

This insight struck me because it perfectly mirrors how our own brains work. Just think about it: your brain doesn't record every single detail of your day like some endless surveillance camera (well, at least not yet; that Black Mirror episode "The Entire History of You" from 2011 seems to have cracked that particular dystopian future, but I digress). Instead, it's constantly making executive decisions about what to keep and what to let go. It's this strategic pruning and synthesis that helps form our stable sense of self.

This forgetting-first approach transforms how we think about AI memory architecture: we design systems that forget strategically from the start, guided by clear objectives about what success looks like for that particular agent.

Looking at the context window architecture, I believe this strategic curation may be the key. When we help our AI agents forget intelligently, their internal state should stay focused on what's truly necessary for their purpose, rather than getting bogged down by every detail from past conversations. While I haven't had the chance to experiment with this approach yet, I'm excited about its potential. The theoretical result? An agent that not only makes better decisions but maintains a more stable, predictable personality, something that's absolutely crucial for creating trust with users.
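To make the forgetting-first idea a little more tangible, here is a minimal sketch of what "strategic pruning" could look like. It is an illustration under my own assumptions, not a tested design: the `Message` class, the `relevance` score, the `pinned` flag and the word-count token estimate are all hypothetical stand-ins (a real system would use a proper tokenizer and some actual way of scoring relevance).

```python
from dataclasses import dataclass


@dataclass
class Message:
    text: str
    relevance: float      # hypothetical score in [0, 1]; how it is computed is out of scope
    pinned: bool = False  # pinned messages (e.g. the agent's identity) are never dropped


def estimate_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: roughly one token per word.
    return len(text.split())


def prune_to_budget(messages: list[Message], budget: int) -> list[Message]:
    """Keep pinned messages, then the most relevant ones that still fit the budget."""
    kept = {i for i, m in enumerate(messages) if m.pinned}
    used = sum(estimate_tokens(messages[i].text) for i in kept)

    # Consider the remaining messages from most to least relevant.
    candidates = sorted(
        (i for i in range(len(messages)) if i not in kept),
        key=lambda i: messages[i].relevance,
        reverse=True,
    )
    for i in candidates:
        cost = estimate_tokens(messages[i].text)
        if used + cost <= budget:
            kept.add(i)
            used += cost

    # Return the survivors in their original conversational order.
    return [messages[i] for i in sorted(kept)]


history = [
    Message("You are a Tier 2 support agent for ElectraTech.", 1.0, pinned=True),
    Message("Customer says hello and chats about the weather.", 0.1),
    Message("Customer already tried power cycling the router.", 0.9),
    Message("WiFi works but Ethernet does not.", 0.8),
]

pruned = prune_to_budget(history, budget=22)
for message in pruned:
    print(message.text)
```

With this toy budget, the small talk about the weather is what gets forgotten, while the identity line and the two diagnostic findings survive. Note that pinned messages are kept even if they alone exceed the budget; that is a design choice of this sketch, made so the agent never forgets who it is.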

As a designer, I can't help but see parallels with some fundamental UX laws here. The Working Memory law from Laws of UX immediately comes to mind: we know users perform better when they don't have to remember too much information at once. I suspect the same principle applies to our AI agents. And when you think about it, the Aesthetic-Usability Effect and Jakob's Law might be just as relevant for AI interactions as they are for human ones. After all, an agent with a coherent, predictable personality is likely to be perceived as more aesthetically pleasing and trustworthy, just like any well-designed interface.

Categorizing cognitive load

In my research, I've been particularly impressed by CrewAI's approach to handling different types of cognitive load. They've broken it down into a framework that, while I haven't implemented it myself yet, feels intuitive.

| Context type | Cognitive challenge | Strategic imperative |
|---|---|---|
| Short context | Think quick customer service interactions, transactional, immediate, with minimal memory needed. | Focus: Here, it's all about optimizing that minimal viable prompt for speed and precision. |
| Long context | Imagine a complex troubleshooting session, sustained dialogue where historical context matters. | Persistence: This is where we need smart memory management and dynamic retrieval. |
| Very long context | Picture a weeks-long project discussion, the really heavy lifting. | Delegation: This is where those fascinating sub-agent architectures come into play. |

I'm particularly excited about exploring that last category: the idea of breaking down complex cognitive tasks into smaller, manageable chunks through sub-agents feels like it could be a game-changer.
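One way I picture the three categories above is as a simple routing decision. The sketch below is purely illustrative: the `Strategy` names mirror the table's Focus, Persistence and Delegation, but the thresholds are numbers I made up, and none of this is taken from CrewAI's API.

```python
from enum import Enum


class Strategy(Enum):
    FOCUS = "focus"              # short context: minimal viable prompt
    PERSISTENCE = "persistence"  # long context: memory management and retrieval
    DELEGATION = "delegation"    # very long context: hand work to sub-agents


# Hypothetical cut-offs, expressed as a fraction of the context window in use.
LONG_THRESHOLD = 0.25
VERY_LONG_THRESHOLD = 0.75


def choose_strategy(estimated_tokens: int, window_size: int) -> Strategy:
    """Pick a context strategy from how much of the window a task is expected to use."""
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    usage = estimated_tokens / window_size
    if usage >= VERY_LONG_THRESHOLD:
        return Strategy.DELEGATION
    if usage >= LONG_THRESHOLD:
        return Strategy.PERSISTENCE
    return Strategy.FOCUS


print(choose_strategy(2_000, 200_000).value)    # quick transactional chat
print(choose_strategy(80_000, 200_000).value)   # sustained troubleshooting session
print(choose_strategy(180_000, 200_000).value)  # weeks-long project discussion
```

In practice the boundary between categories is probably fuzzier than a single ratio, but even a crude rule like this forces the design question: at what point does this agent stop carrying everything itself?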

Defining the Agent's self

Reading Udara's work on Affordance got me thinking about how we define an agent's identity. The initial context isn't just a configuration; it's more like defining the agent's sense of self: what it can do, how it should behave and, most importantly, how it understands its role. The AI Design Guide suggests we should approach these models not as simple functions, but as colleagues. That perspective resonated with me as a designer. After all, we're not just programming tools; we're designing personalities.

From what I understand, creating a coherent AI colleague requires three key elements: Persona, Constraints and Resources. It's fascinating how similar this is to onboarding a new team member: you need to define their role, set clear boundaries and provide the tools they need to succeed.

Every.to talks about something that really clicks with me: the "vibe check." It's not just about making the AI sound human; it's about crafting a consistent personality that feels authentic and trustworthy. When we get the Persona and Constraints right, the AI shifts from sounding like, well, an AI, to communicating like a helpful, knowledgeable expert in their domain.

Here's an example where the structure makes intuitive sense: architecting a Tier 2 technical support agent's identity.

(The Persona) You are a polite, helpful and highly knowledgeable Tier 2 Technical Support Agent for "ElectraTech." Your primary goal is to diagnose and resolve technical issues...

(The Constraints) All warranty claims are valid for 1 year from the purchase date. Do not promise a refund. Only offer replacement or store credit.

Looking at this framework, I can see how it could provide the high-signal context needed to anchor the agent's behavior. It reminds me of the best support interactions I've had, where the agent was both technically competent and empathetically aligned with their role. The structured approach to defining identity seems like it could help create that same level of consistency in AI interactions.
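Here is a rough sketch of how the three elements could be kept as separate, structured pieces and only assembled into the initial context at the end. The `AgentIdentity` class and the section headings it emits are my own assumptions for illustration; the persona and constraint text comes from the ElectraTech example above (the persona is left elided exactly as it appears there), and the two resources are the tool names discussed later in this article.

```python
from dataclasses import dataclass, field


@dataclass
class AgentIdentity:
    persona: str
    constraints: list[str] = field(default_factory=list)
    resources: list[str] = field(default_factory=list)

    def to_system_prompt(self) -> str:
        """Render the identity as one system prompt with clearly separated sections."""
        sections = [f"# Persona\n{self.persona}"]
        if self.constraints:
            rules = "\n".join(f"- {rule}" for rule in self.constraints)
            sections.append(f"# Constraints\n{rules}")
        if self.resources:
            tools = "\n".join(f"- {tool}" for tool in self.resources)
            sections.append(f"# Resources\n{tools}")
        return "\n\n".join(sections)


tier2_agent = AgentIdentity(
    # The trailing "..." is elided in the original example and is kept as-is.
    persona=(
        'You are a polite, helpful and highly knowledgeable Tier 2 Technical '
        'Support Agent for "ElectraTech." Your primary goal is to diagnose '
        'and resolve technical issues...'
    ),
    constraints=[
        "All warranty claims are valid for 1 year from the purchase date.",
        "Do not promise a refund. Only offer replacement or store credit.",
    ],
    resources=[
        "check_warranty_status(serial_number)",
        "get_device_manual(model_id)",
    ],
)

system_prompt = tier2_agent.to_system_prompt()
print(system_prompt)
```

Keeping the pieces separate is the part that appeals to me as a designer: a constraint can be reviewed or changed without touching the persona, much like editing one section of an onboarding document.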

Embodied intelligence

One of the most fascinating concepts I've encountered in this space is how an AI agent actually interacts with its environment. Just like we humans need our senses and motor skills to engage with the world, AI agents need their own way of perceiving and acting, through tools like databases and APIs. These are what we call Resources in the prompt design world, and from what I can see, the way we engineer these interactions could make or break the agent's effectiveness.

There's this principle that keeps coming up in my research: the Just-in-Time (JIT) approach. Think about how overwhelming it would be if someone dumped their entire database of knowledge on you at once. Instead of that cognitive overload, what if we gave AI agents just the tools they need, when they need them? Simple, clear functions like check_warranty_status(serial_number) or get_device_manual(model_id). It's an elegant solution I'm eager to see in action.

| Anti-Pattern (Cognitive bloat) | JIT Approach (Cognitive efficiency) | Rationale |
|---|---|---|
| Pre-loading the entire knowledge base of product manuals and warranty information into every customer interaction. | Providing focused tools like check_warranty_status(serial_number) that fetch just the relevant warranty details for a specific device. | The support agent only loads the specific warranty information when needed, keeping the interaction focused and efficient. |
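A small sketch helps me see the difference between the two columns. Only the function names `check_warranty_status(serial_number)` and `get_device_manual(model_id)` come from the example above; the in-memory "databases", the `TOOLS` registry and the `call_tool` helper are hypothetical stand-ins with made-up data, not any particular framework's API.

```python
from typing import Callable

# Made-up data standing in for real back-end systems.
_WARRANTY_DB = {"RT300-445XA": {"status": "active"}}
_MANUALS = {"RT-300": "RT-300 manual: hold the reset button for 10 seconds."}


def check_warranty_status(serial_number: str) -> dict:
    record = _WARRANTY_DB.get(serial_number)
    if record is None:
        return {"serial_number": serial_number, "status": "unknown"}
    return {"serial_number": serial_number, **record}


def get_device_manual(model_id: str) -> str:
    return _MANUALS.get(model_id, "No manual found.")


# Only these short descriptions live in the agent's context up front.
TOOLS: dict[str, tuple[str, Callable]] = {
    "check_warranty_status": ("Look up warranty status by serial number.", check_warranty_status),
    "get_device_manual": ("Fetch the manual for one device model.", get_device_manual),
}


def describe_tools() -> str:
    """The lightweight text the agent sees instead of the whole knowledge base."""
    return "\n".join(f"{name}: {description}" for name, (description, _) in TOOLS.items())


def call_tool(name: str, **kwargs):
    """Fetch data just in time, when the agent decides it needs it."""
    if name not in TOOLS:
        raise KeyError(f"Unknown tool: {name}")
    _, function = TOOLS[name]
    return function(**kwargs)


print(describe_tools())
print(call_tool("check_warranty_status", serial_number="RT300-445XA"))
```

The point of the sketch is the asymmetry: two one-line descriptions sit in context for the whole conversation, while the actual warranty record only enters it at the moment the agent asks.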

You know what this reminds me of? Occam's Razor. As a designer, I've always appreciated how this UX law pushes us toward simplicity. And here we are, applying the same principle to AI architecture: the simplest toolset that gets the job done is often the most effective. It's one of those principles that seems to hold true whether we're designing for human users or AI agents.

The long game

Let's talk about one of the biggest challenges in technical support: maintaining context across long, complex troubleshooting sessions. These Long-Horizon Tasks might span hours or even days, and from what I understand, our AI support agents need specialized memory systems to maintain their understanding of the ongoing issue and their identity as a helpful expert throughout the process.

Compaction (synthesis as survival)

Imagine a complex router troubleshooting session that's been going on for hours. The customer has tried multiple solutions, various settings have been checked and several diagnostic tools have been run. When all this information starts approaching the context limit, that's where Compaction comes in. It's like creating a smart case summary, preserving crucial details like "Customer already tried power cycling" or "WiFi works but Ethernet doesn't" while trimming away the redundant back-and-forth.

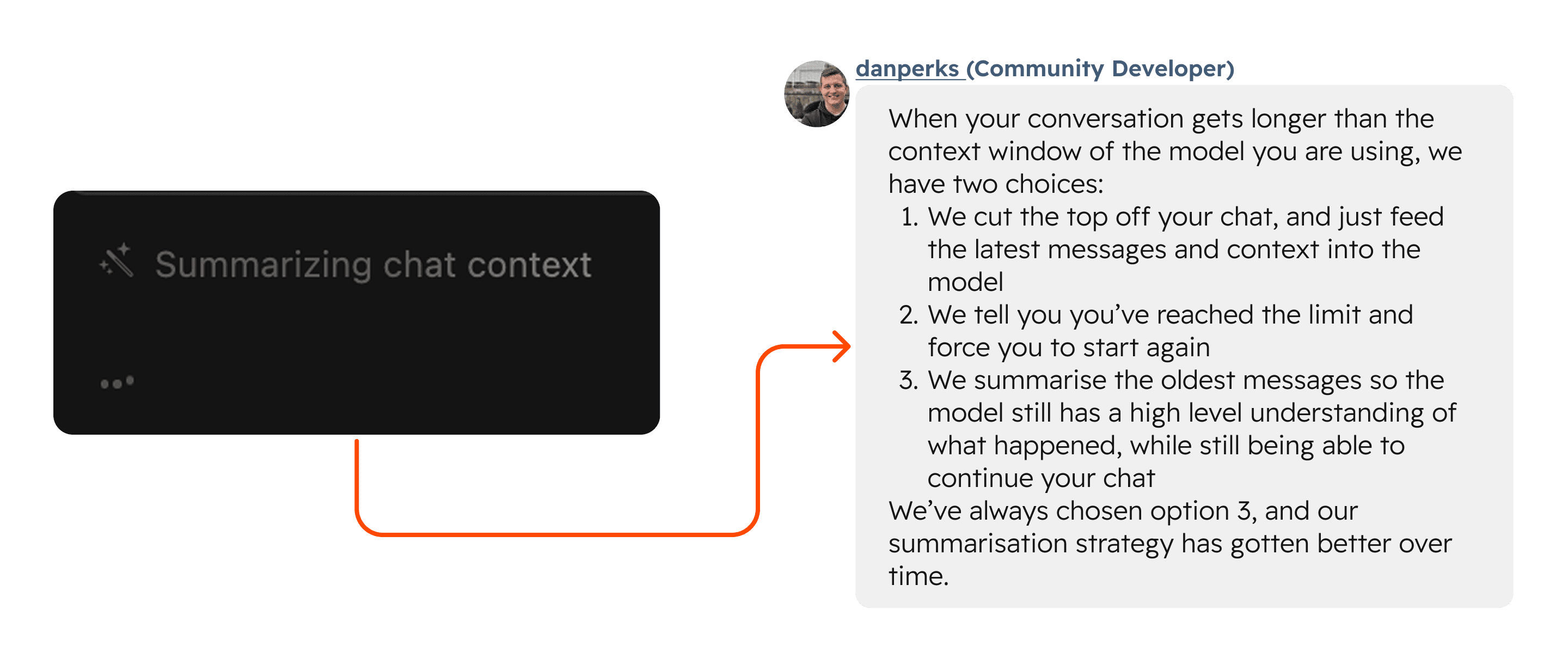

I recently saw this in action with tools like Cursor (see its documentation and forum), where the visual "Summarizing chat" cue signals that the agent is doing exactly this kind of synthesis. It's fascinating to watch: the agent isn't just forgetting randomly; it's strategically preserving the intent and key findings while letting go of the step-by-step details that are no longer relevant.
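To get a feel for the mechanics, here is a minimal sketch of compaction as I understand it. It is not how Cursor implements its summarization. The word-count token estimate, the `keep_recent` parameter and especially `toy_summarizer` are assumptions of mine; in a real agent the summarizer would most likely be a call to the model itself.

```python
from typing import Callable


def estimate_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: roughly one token per word.
    return len(text.split())


def compact(
    history: list[str],
    limit: int,
    keep_recent: int,
    summarize: Callable[[list[str]], str],
) -> list[str]:
    """Replace older turns with one summary once the history exceeds the limit."""
    total = sum(estimate_tokens(turn) for turn in history)
    split = len(history) - keep_recent
    if total <= limit or split <= 0:
        return history  # still within budget, or nothing old enough to compact
    older, recent = history[:split], history[split:]
    return [f"[Case summary] {summarize(older)}"] + recent


def toy_summarizer(turns: list[str]) -> str:
    # Hypothetical stand-in for an LLM call: keep only turns marked as findings.
    prefix = "Finding: "
    return " ".join(t[len(prefix):] for t in turns if t.startswith(prefix))


turns = [
    "Agent: Hello, how can I help you today?",
    "Finding: Customer already tried power cycling.",
    "Customer: OK let me check the cable again.",
    "Finding: WiFi works but Ethernet doesn't.",
    "Customer: What should I try next?",
]

compacted = compact(turns, limit=10, keep_recent=1, summarize=toy_summarizer)
for line in compacted:
    print(line)
```

Five turns collapse into a one-line case summary plus the customer's latest message, which is the "smart case summary" idea in miniature.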

Structured note-taking (soft-skills framework)

Think about how human support agents maintain case notes. They don't just rely on chat logs; they keep structured notes about key findings, attempted solutions and next steps. From what I've seen in the documentation, AI agents could benefit from a similar system: a persistent external ledger that acts like their personal case management system.

"I tend to think of this stuff as a 'soft-skills' framework for AI. While the memory system isn't geared toward acting as a database, it tells the agent who, what and where the agent is." (Udara)

Systems like CursorRIPER.sigma employ dedicated Memory Bank Files. Imagine an AI support agent writing notes like: "Device Serial: RT300-445XA, Issue: Intermittent connectivity, Last Action: Initiated firmware rollback, Next Step: Verify network settings post-rollback." These structured notes ensure that even if the agent needs to "refresh" its context, it maintains continuity in the support journey.
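As a sketch of what such a ledger might look like, the snippet below writes the note from the example to a JSON file and reads it back after a simulated context refresh. To be clear, this is not CursorRIPER.sigma's actual Memory Bank format; the `CaseNote` fields simply mirror the example note, and the file name and helper functions are hypothetical.

```python
import json
import tempfile
from dataclasses import asdict, dataclass
from pathlib import Path


@dataclass
class CaseNote:
    device_serial: str
    issue: str
    last_action: str
    next_step: str


def save_note(path: Path, note: CaseNote) -> None:
    """Persist the note outside the context window."""
    path.write_text(json.dumps(asdict(note), indent=2), encoding="utf-8")


def load_note(path: Path) -> CaseNote:
    """Restore the note after the agent's context has been refreshed."""
    return CaseNote(**json.loads(path.read_text(encoding="utf-8")))


note = CaseNote(
    device_serial="RT300-445XA",
    issue="Intermittent connectivity",
    last_action="Initiated firmware rollback",
    next_step="Verify network settings post-rollback",
)

with tempfile.TemporaryDirectory() as tmp:
    ledger = Path(tmp) / "case_note.json"  # hypothetical file name
    save_note(ledger, note)
    restored = load_note(ledger)

print(restored.next_step)
```

Because the note lives in a file rather than in the conversation, the agent can lose its entire chat history and still know what to do next.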

Sub-Agent architectures (delegated intelligence)

Here's where things get really interesting. In complex support scenarios, sometimes you need to bring in specialists. In the human world, we escalate to Tier 3 support or consult with specific product teams. The concept of Sub-Agent Architectures suggests we could do something similar with AI: having specialized agents handle specific aspects of complex problems.

I've been particularly intrigued by CrewAI's hierarchical process. Imagine our main support agent as a Manager LLM that maintains the big picture of the customer's issue. When they need to dive deep into specific product documentation or investigate complex compatibility issues, instead of getting bogged down in thousands of technical documents, they could delegate that research to a specialized "Research Agent".

For example, while troubleshooting our Smart Thermostat connectivity issues, the main agent could maintain focus on the customer interaction while delegating the deep technical research to a specialized agent that combs through firmware release notes, known issues databases and compatibility matrices.

The Coherence Loop: What I find clever about this approach is how the Research Agent doesn't dump all its findings back to the main agent. Instead, it might return just the essential insight: "Firmware version 2.4.6 has a known WiFi chip conflict with router model RT-300." This keeps the main agent's context clean and focused on solving the customer's problem.
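Here is a toy version of that loop, to show the shape of the idea rather than any real framework. This is not CrewAI's API. `make_researcher`, `ManagerAgent` and the keyword-overlap matching are my own simplifications, and apart from the firmware 2.4.6 line quoted above, the corpus entries are invented filler.

```python
from typing import Callable


def _words(text: str) -> set[str]:
    return {w.strip(".,?!").lower() for w in text.split()}


def make_researcher(corpus: list[str]) -> Callable[[str], str]:
    """Hypothetical Research Agent: reads the whole corpus, returns one insight."""
    def research(query: str) -> str:
        if not corpus:
            return "No relevant finding."
        terms = _words(query)
        best = max(corpus, key=lambda doc: len(terms & _words(doc)))
        return best if terms & _words(best) else "No relevant finding."
    return research


class ManagerAgent:
    """Main support agent: keeps only the customer dialogue and distilled insights."""

    def __init__(self, researcher: Callable[[str], str]) -> None:
        self.context: list[str] = []
        self.researcher = researcher

    def handle(self, customer_message: str) -> str:
        self.context.append(f"Customer: {customer_message}")
        insight = self.researcher(customer_message)  # the heavy reading happens elsewhere
        self.context.append(f"Research insight: {insight}")
        return insight


corpus = [
    "Firmware version 2.4.6 has a known WiFi chip conflict with router model RT-300.",
    "Firmware version 2.3.0 improved battery reporting.",      # invented filler
    "The thermostat supports schedules of up to seven days.",  # invented filler
]

manager = ManagerAgent(make_researcher(corpus))
insight = manager.handle("My thermostat loses WiFi with my RT-300 router")
print(insight)
print(len(manager.context))
```

However large the corpus grows, the manager's context gains exactly two lines per exchange: what the customer said and the one insight that came back.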

This sub-agent approach feels like it could be a game-changer. Google Research's recent work on personal health agents provides a fascinating real-world example of this approach in action. Their implementation of multiple specialized agents working together to handle complex health queries demonstrates exactly what I'm excited about: how hierarchical delegation can maintain context while managing complexity. It beautifully exemplifies two more principles that I find particularly relevant: Hierarchy and Modularization.

Just as these laws guide us in breaking down complex interfaces into manageable, interoperable components, they're equally powerful when applied to AI architecture. By compartmentalizing complex tasks and maintaining a clean, high-level context, we can ensure the agent's coherence and identity are preserved, even in the face of massive information processing. This hierarchical, modular approach isn't just about technical efficiency; it's crucial for delivering those seamless, "Wow-worthy" experiences that users remember.

Engineering for the "Wow Moment"

Looking at all these pieces, from smart context management to specialized sub-agents, I'm starting to see Context Engineering as something more than just technical architecture. It's about creating AI support agents that can maintain coherent, helpful personalities throughout complex customer interactions. The principles of strategic forgetting, minimal viable toolsets and delegated intelligence aren't just solving technical problems; they're addressing the fundamental challenge of making AI interactions feel natural and effective.

Think about those rare support experiences that leave you thinking "Wow, they really understood my problem and knew exactly how to help." That's what we're aiming for with Context Engineering. It's not just about having the right information; it's about maintaining that golden thread of understanding throughout the entire support journey. As we continue to develop these technologies, I'm excited to see how they'll transform not just technical support, but every kind of AI interaction that requires sustained, meaningful engagement with users.

Final thoughts

As we continue to explore and experiment with Context Engineering, I believe we're just scratching the surface of what's possible. The principles and approaches discussed here aren't just theoretical constructs; they're potential building blocks for creating AI interactions that feel natural, coherent and truly helpful.

For designers working in AI, our challenge is clear: we need to think beyond traditional UX paradigms and consider how these context engineering principles can help us create experiences that maintain meaningful, continuous relationships with users. The technical foundation is there; now it's up to us to shape it into something remarkable.